Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It organizes containers into logical units for simplified management and discovery.

Kubernetes offers a platform to schedule and run containers across clusters of physical or virtual machines. By abstracting the underlying infrastructure, it ensures portability across both cloud and on-premises environments. Key features include service discovery, load balancing, secret and configuration management, rolling updates, and self-healing capabilities.

Kubernetes supports microservices architecture in several ways:

- It provides a robust foundation for deploying and running microservices.

- It offers essential services like service discovery and load balancing, which are crucial for a microservices architecture.

- It includes tools and APIs for automating the deployment, scaling, and management of microservices.

6 best practices for running microservices on Kubernetes

Here are some key strategies to deploy microservices on Kubernetes more effectively:

1. Streamline traffic management with Ingress

Managing traffic in a microservices architecture can be challenging. With many independent services, each exposing its own endpoint, routing every request to the correct service quickly becomes complicated. This is where Kubernetes Ingress helps.

An Ingress is an API object that routes external HTTP and HTTPS traffic to Services inside the cluster based on the request's host and path. The routing rules are enforced by an Ingress controller (for example, ingress-nginx), which acts as a reverse proxy and forwards each incoming request to the appropriate Service. This lets you expose multiple services behind a single IP address, simplifying your application's architecture and making it easier to manage.

Beyond routing, Ingress also supports SSL/TLS termination, load balancing, and name-based virtual hosting, which help improve the performance and security of your microservices application.
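
To make host- and path-based routing concrete, here is a minimal Python model of how an Ingress controller might choose a backend Service. It is a simplification under stated assumptions: the rule fields loosely mirror the Ingress spec (host, path, and a pathType of "Prefix" or "Exact"), Prefix matching compares whole path elements, and the longest matching path wins. The hostnames and Service names are hypothetical, and real controllers such as ingress-nginx add behavior this sketch ignores (wildcard hosts, default backends, regex paths).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class IngressRule:
    """One host/path rule, loosely mirroring an Ingress spec entry (simplified)."""
    host: Optional[str]  # None means "any host"
    path: str
    path_type: str       # "Prefix" or "Exact"
    service: str         # hypothetical backend Service name


def _segments(path: str) -> List[str]:
    # "/api/orders/" -> ["api", "orders"]; empty segments are ignored
    return [s for s in path.split("/") if s]


def _matches(rule: IngressRule, path: str) -> bool:
    if rule.path_type == "Exact":
        return rule.path == path
    # Prefix matching compares whole path elements, so "/api/orders"
    # matches "/api/orders/42" but not "/api/ordersX".
    prefix = _segments(rule.path)
    return _segments(path)[: len(prefix)] == prefix


def route(rules: List[IngressRule], host: str, path: str) -> Optional[str]:
    """Return the Service that should receive the request, or None."""
    best_rule, best_key = None, None
    for rule in rules:
        if rule.host is not None and rule.host != host:
            continue
        if not _matches(rule, path):
            continue
        # Simplified precedence: host-specific rules, then longer paths,
        # then Exact over Prefix.
        key = (rule.host is not None, len(rule.path), rule.path_type == "Exact")
        if best_key is None or key > best_key:
            best_rule, best_key = rule, key
    return best_rule.service if best_rule else None


if __name__ == "__main__":
    rules = [
        IngressRule("shop.example.com", "/", "Prefix", "frontend-svc"),
        IngressRule("shop.example.com", "/api/orders", "Prefix", "orders-svc"),
    ]
    print(route(rules, "shop.example.com", "/api/orders/42"))  # orders-svc
```

Both rules match "/api/orders/42", but the longer "/api/orders" path takes precedence, so one IP address can front many services without them stepping on each other.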

2. Leverage Kubernetes to scale microservices

A key advantage of a microservices architecture is the ability to scale individual services independently. This lets you allocate resources more efficiently and handle variable workloads precisely. Kubernetes offers several tools to help you scale your microservices.

One such tool is the Horizontal Pod Autoscaler (HPA), which automatically adjusts the number of pods in a Deployment based on observed CPU utilization or, with custom metrics support, other application-specific metrics. This lets your application adapt to fluctuating load, adding pods as demand rises and removing them as it falls, so that allocated resources track actual demand.
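
The core of the HPA's decision is a documented ratio: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below reproduces that calculation, including the default 10% tolerance within which the HPA leaves the replica count unchanged, and clamps the result to the configured minimum and maximum. It deliberately omits parts of the real controller such as stabilization windows, scaling policies, and the handling of pods that are not yet ready, so treat it as an illustration rather than a reimplementation.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Approximate the HPA's replica calculation for a single metric.

    current_metric and target_metric must use the same unit, for example
    average CPU utilization as a percentage of the pods' CPU requests.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(1.0 - ratio) <= tolerance:
        # Close enough to the target: skip scaling to avoid flapping.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the HPA's minReplicas / maxReplicas bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target -> ceil(4 * 1.5) = 6
    print(desired_replicas(4, current_metric=90, target_metric=60))
```

Because the result is rounded up, the HPA errs toward adding capacity, and the tolerance band keeps small metric fluctuations from constantly resizing the Deployment.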

Kubernetes also supports manual scaling, letting you increase or decrease the number of pods in a Deployment yourself. This is useful for predictable events, such as a marketing campaign, where you expect a temporary surge in traffic.

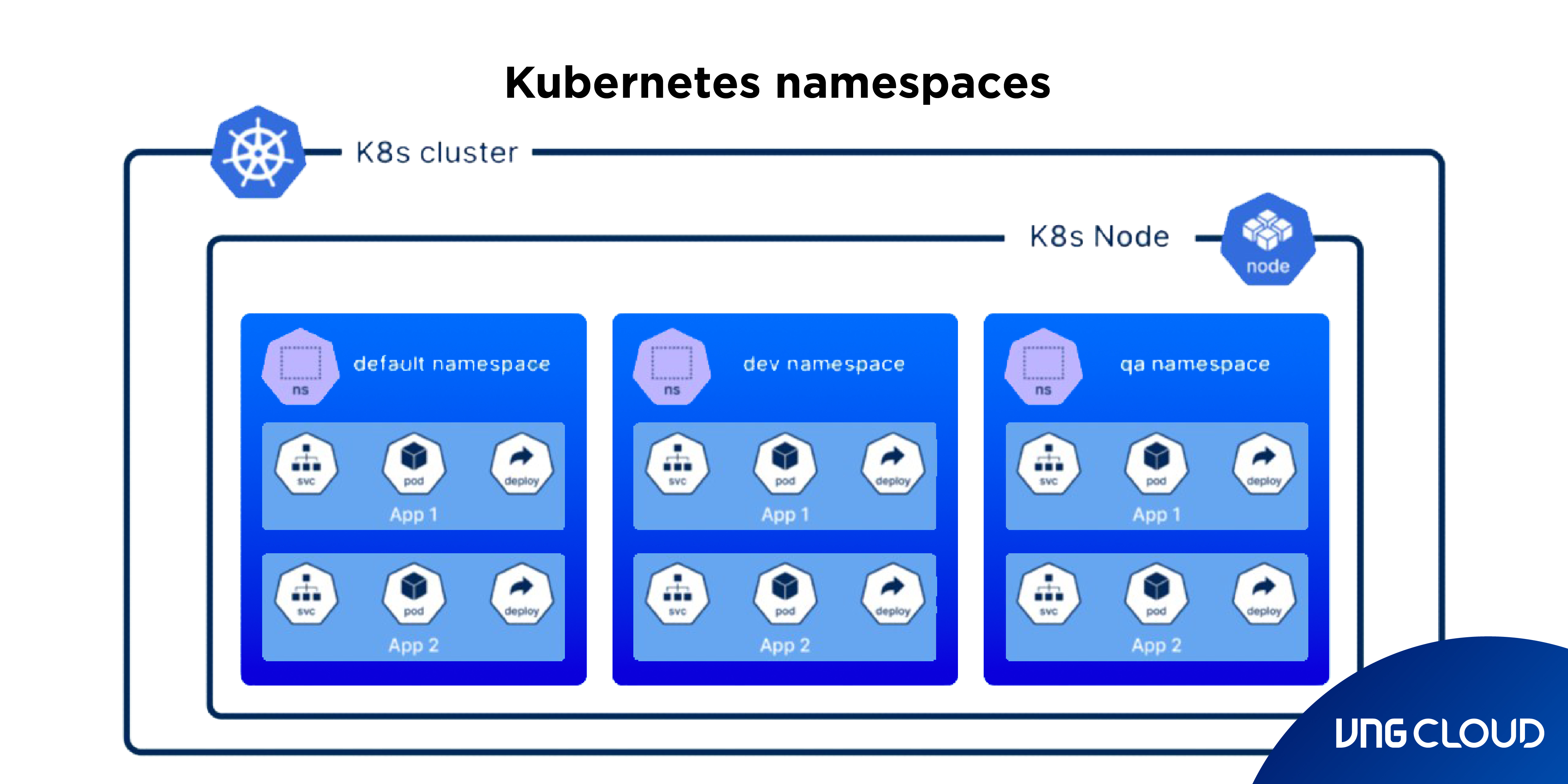

3. Use namespaces

Effective organization is crucial in managing large and complex applications. Kubernetes namespaces offer a solution for partitioning cluster resources among multiple users or teams. Each namespace establishes a distinct scope for names, ensuring that resource names within one namespace do not conflict with those in others.

Namespaces can greatly simplify the administration of your microservices. By grouping related services in the same namespace, you can manage them together and apply policies, such as resource quotas and access controls, at the namespace level.
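
As one example of a namespace-level policy, the Python sketch below generates a Namespace and a ResourceQuota for each team and prints them as a JSON List that could be piped to "kubectl apply -f -". The team names, labels, and quota values are hypothetical placeholders; the field names follow the core v1 Namespace and ResourceQuota APIs.

```python
import json
from typing import Dict, List


def team_namespace(team: str, cpu: str, memory: str, max_pods: int) -> List[Dict]:
    """Build a Namespace plus a ResourceQuota that caps the team's usage."""
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": team, "labels": {"team": team}},
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{team}-quota", "namespace": team},
        "spec": {
            "hard": {
                "requests.cpu": cpu,        # total CPU requested by all pods
                "requests.memory": memory,  # total memory requested by all pods
                "pods": str(max_pods),      # maximum number of pods
            }
        },
    }
    return [namespace, quota]


def render(teams: Dict[str, Dict]) -> str:
    items = []
    for team, limits in teams.items():
        items.extend(team_namespace(team, **limits))
    # A v1 List lets kubectl apply every object from a single document.
    return json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2)


if __name__ == "__main__":
    # Hypothetical teams and limits.
    print(render({
        "payments": {"cpu": "4", "memory": "8Gi", "max_pods": 20},
        "catalog": {"cpu": "2", "memory": "4Gi", "max_pods": 10},
    }))
```

Each team's services then live in their own namespace, so a Service named "api" in "payments" does not collide with one named "api" in "catalog", and each team's total resource requests are capped independently.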

4. Implement health checks

Health checks are essential for microservices. They let Kubernetes monitor the status of each service and confirm that it is operating as intended.

Kubernetes offers three types of health checks, called probes: liveness, readiness, and startup probes. A readiness probe determines whether a pod is ready to receive requests; while it fails, the pod is removed from the endpoints of its Services. A liveness probe determines whether a container is still healthy; if it fails, the kubelet restarts the container. A startup probe holds off the other two probes until a slow-starting application has finished initializing.

These checks are central to keeping an application resilient and responsive. They let Kubernetes automatically restart unhealthy containers and stop routing traffic to pods that are not ready, protecting the availability of your application.
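
The sketch below shows one way a service could expose separate liveness and readiness endpoints using only the Python standard library, together with probe settings that point at them. The paths /healthz and /readyz, port 8080, and the timing values are common conventions chosen for illustration, not requirements; the probe field names (httpGet, initialDelaySeconds, periodSeconds, failureThreshold) come from the Kubernetes container spec. The probe() helper only imitates the kubelet's HTTP check, where a status from 200 to 399 counts as success.

```python
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Probe settings to place under the container in a Deployment's pod template.
PROBES = {
    "livenessProbe": {
        "httpGet": {"path": "/healthz", "port": 8080},
        "initialDelaySeconds": 5,
        "periodSeconds": 10,
    },
    "readinessProbe": {
        "httpGet": {"path": "/readyz", "port": 8080},
        "periodSeconds": 5,
        "failureThreshold": 3,
    },
}


class HealthState:
    """Thread-safe readiness flag, flipped once dependencies are available."""

    def __init__(self) -> None:
        self._ready = False
        self._lock = threading.Lock()

    def set_ready(self, ready: bool) -> None:
        with self._lock:
            self._ready = ready

    def is_ready(self) -> bool:
        with self._lock:
            return self._ready


def make_handler(state: HealthState):
    class HealthHandler(BaseHTTPRequestHandler):
        def do_GET(self) -> None:
            if self.path == "/healthz":
                status = 200  # liveness: the process is up and serving HTTP
            elif self.path == "/readyz":
                # Readiness: accept traffic only once initialization is done.
                status = 200 if state.is_ready() else 503
            else:
                status = 404
            body = str(status).encode()
            self.send_response(status)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args) -> None:
            pass  # keep frequent probe requests out of the logs

    return HealthHandler


def start_server(state: HealthState, port: int = 8080) -> ThreadingHTTPServer:
    # Listen on all interfaces so the kubelet can reach the pod's IP.
    server = ThreadingHTTPServer(("0.0.0.0", port), make_handler(state))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def probe(port: int, path: str) -> bool:
    """Imitate an HTTP probe: any status from 200 to 399 counts as success."""
    try:
        with urllib.request.urlopen(f"http://127.0.0.1:{port}{path}", timeout=2) as resp:
            return 200 <= resp.status < 400
    except urllib.error.HTTPError:
        return False
```

Keeping the two endpoints separate matters: a service that is temporarily unable to reach a dependency should fail readiness (and stop receiving traffic) without failing liveness (and being restarted). In a real service, set_ready(True) would be called once startup work, such as opening a connection pool, has finished.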

5. Use service mesh

A service mesh is a dedicated infrastructure layer that handles service-to-service communication in a microservices application. Its primary role is to deliver requests reliably across the complex network of services that make up the application.

For microservices on Kubernetes, a service mesh provides traffic management, service discovery, load balancing, and failure recovery. It also offers features such as circuit breakers, timeouts, and retries, which help keep your microservices stable and efficient.

Kubernetes provides some of these capabilities natively, but a service mesh goes further, giving you fine-grained control over how services interact. Whether you choose Istio, Linkerd, or another service mesh, it can be a powerful addition to your Kubernetes toolkit.
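
Service meshes provide circuit breaking through configuration, so you normally do not write this logic yourself. To show what a circuit breaker actually does, here is a minimal, single-threaded Python sketch of the classic closed / open / half-open pattern: after a number of consecutive failures the breaker opens and fails fast, and after a cool-down it lets a trial request through. The thresholds are illustrative, and this is not how any particular mesh implements the feature (Istio, for instance, expresses it as connection-pool limits and outlier detection in a DestinationRule).

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is rejected without trying."""


class CircuitBreaker:
    """Minimal closed / open / half-open circuit breaker (single-threaded sketch)."""

    def __init__(self, failure_threshold: int = 5, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"  # cool-down elapsed: allow a trial request
        return "open"

    def call(self, fn: Callable[[], T]) -> T:
        state = self.state
        if state == "open":
            # Fail fast instead of sending more traffic to a struggling service.
            raise CircuitOpenError("circuit is open")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # (re)open and restart the cool-down
            raise
        # A success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

The injectable clock makes the cool-down easy to test; a mesh applies the same idea at the proxy level, so every service gets it without code changes.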

6. Design each microservice for a single responsibility

Embracing the single responsibility principle when designing microservices is fundamental to a successful microservices architecture, especially in a Kubernetes deployment. This principle fosters cohesion and facilitates a clear separation of concerns.

When deploying microservices on Kubernetes, assigning a distinct responsibility to each service streamlines scalability, monitoring, and administration. Kubernetes empowers you to define varied scaling policies, resource allocations, and security settings tailored to each microservice. Adhering to a single-responsibility approach allows you to leverage these capabilities fully, customizing the infrastructure to meet the unique requirements of each service.

A single-responsibility design also simplifies troubleshooting and maintenance. When problems arise, it is much easier to pinpoint and fix an issue in a service with a clearly defined role. This is especially valuable in Kubernetes, where logs, metrics, and debugging data can become large and complex because the system is distributed.

How to manage and maintain microservices with Kubernetes

1. Deploying microservices to Kubernetes

Deploying microservices on Kubernetes usually entails setting up a Kubernetes Deployment (or a comparable object like a StatefulSet) for each microservice. A Deployment defines the number of microservice replicas to run, the container image to utilize, and the configuration for the microservice.

After you create the Deployment, Kubernetes schedules the specified number of replicas onto nodes in the cluster and continuously monitors them. If a container crashes, Kubernetes restarts it, and if a pod is lost (for example, because its node fails), Kubernetes creates a replacement pod.
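
For example, a Deployment for a single microservice might look like the manifest generated below. The sketch builds it as a Python dictionary and prints JSON, which "kubectl apply -f" accepts just as it accepts YAML. The service name, image reference, port, and environment variable are hypothetical; the field structure follows the apps/v1 Deployment API, in which spec.selector must match the labels of the pod template.

```python
import json
from typing import Dict


def deployment(name: str, image: str, replicas: int, port: int, env: Dict[str, str]) -> Dict:
    """Build an apps/v1 Deployment manifest for one microservice."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector tells the Deployment which pods it owns; it must
            # match the pod template's labels or the API server rejects it.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                            "env": [{"name": k, "value": v} for k, v in env.items()],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical microservice; pipe the output to "kubectl apply -f -".
    manifest = deployment(
        name="orders",
        image="registry.example.com/orders:1.4.2",
        replicas=3,
        port=8080,
        env={"LOG_LEVEL": "info"},
    )
    print(json.dumps(manifest, indent=2))
```

Generating manifests from one function keeps labels, selectors, and naming consistent across many microservices, which is one reason teams often template them (with tools such as Helm or Kustomize) rather than writing each by hand.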

2. Scaling microservices on Kubernetes

Scaling a microservice on Kubernetes manually means changing the number of replicas defined in its Deployment. Increasing the replica count lets the microservice handle more load, while decreasing it reduces the resources the microservice consumes.

Kubernetes can also scale microservices automatically based on CPU usage or other metrics provided by the application. This lets a microservice adapt to changes in load without manual intervention.
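
Both approaches can be expressed as small API payloads. The Python sketch below builds (a) a JSON merge patch that sets spec.replicas, the same change a manual scale makes, and (b) a HorizontalPodAutoscaler in the autoscaling/v2 API that targets average CPU utilization. The Deployment name and the numeric limits are hypothetical; you could apply the patch with "kubectl patch deployment <name> --type merge -p ..." and the HPA with "kubectl apply -f".

```python
import json
from typing import Dict


def scale_patch(replicas: int) -> Dict:
    """JSON merge patch that sets a Deployment's replica count (manual scaling)."""
    if replicas < 0:
        raise ValueError("replicas must be >= 0")
    return {"spec": {"replicas": replicas}}


def cpu_hpa(deployment: str, min_replicas: int, max_replicas: int, cpu_percent: int) -> Dict:
    """HorizontalPodAutoscaler (autoscaling/v2) that targets average CPU utilization."""
    if not 1 <= min_replicas <= max_replicas:
        raise ValueError("need 1 <= min_replicas <= max_replicas")
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": deployment},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": deployment},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        # Utilization is measured relative to the pods' CPU requests.
                        "target": {"type": "Utilization", "averageUtilization": cpu_percent},
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(scale_patch(5)))
    print(json.dumps(cpu_hpa("orders", min_replicas=2, max_replicas=10, cpu_percent=60), indent=2))
```

Note that once an HPA manages a Deployment, it owns the replica count, so manual patches to spec.replicas will be overridden on its next evaluation.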

3. Monitoring microservices with Kubernetes

Monitoring microservices in Kubernetes involves collecting metrics from the nodes, the control plane, and the individual microservices. Kubernetes exposes built-in metrics for nodes and control-plane components, which you can collect and visualize with tools such as Prometheus and Grafana.

To monitor the applications running inside each microservice, you can use application performance monitoring (APM) tools to collect detailed performance data. These tools provide insight into response times, error rates, and other key performance metrics.
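
Prometheus collects metrics by scraping an HTTP endpoint that returns them in a plain-text exposition format. The Python sketch below tracks two signals that APM tools also report, request counts by status code and request latency, computes an error rate, and renders the data in that text format. The metric names, label names, and the choice to count 5xx responses as errors are illustrative assumptions; in production you would normally use an official client library (such as prometheus_client) instead of formatting the text by hand.

```python
from collections import Counter


class RequestMetrics:
    """Minimal in-process request metrics for one service (illustrative only)."""

    def __init__(self, service: str) -> None:
        self.service = service
        self.requests = Counter()  # request count per HTTP status code
        self.latency_sum = 0.0     # total seconds spent handling requests
        self.latency_count = 0

    def observe(self, status_code: int, seconds: float) -> None:
        self.requests[status_code] += 1
        self.latency_sum += seconds
        self.latency_count += 1

    def error_rate(self) -> float:
        """Fraction of requests that returned a 5xx status."""
        total = sum(self.requests.values())
        errors = sum(n for code, n in self.requests.items() if code >= 500)
        return errors / total if total else 0.0

    def render(self) -> str:
        """Render the metrics in the Prometheus text exposition format."""
        svc = self.service
        lines = [
            "# HELP http_requests_total Total HTTP requests handled.",
            "# TYPE http_requests_total counter",
        ]
        for code in sorted(self.requests):
            lines.append(f'http_requests_total{{service="{svc}",code="{code}"}} {self.requests[code]}')
        lines += [
            "# HELP http_request_duration_seconds Time spent handling HTTP requests.",
            "# TYPE http_request_duration_seconds summary",
            f'http_request_duration_seconds_sum{{service="{svc}"}} {self.latency_sum}',
            f'http_request_duration_seconds_count{{service="{svc}"}} {self.latency_count}',
        ]
        return "\n".join(lines) + "\n"
```

Exposing raw counters and sums, rather than precomputed rates, lets Prometheus derive error rates and average latency over any time window at query time.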

4. Debugging microservices in a Kubernetes environment

Debugging microservices in Kubernetes involves inspecting their logs and metrics and, when necessary, attaching a debugger to the running microservice.

Kubernetes provides built-in ways to view container logs (for example, "kubectl logs") and resource metrics (for example, "kubectl top", which requires the metrics-server add-on) that help identify performance bottlenecks. You can also use "kubectl debug node/<node-name>" to run a troubleshooting pod on a specific node, which is useful when you cannot access the node over SSH.

When these tools are not enough, you may need to attach a debugger to the running microservice. This is harder in Kubernetes than on a single machine, because each microservice runs in containers that may be spread across many nodes.
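
Because "kubectl logs" shows whatever a container writes to stdout and stderr, emitting one JSON object per line and tagging each entry with a request ID makes it much easier to filter logs and follow a single request across several microservices. The sketch below uses Python's standard logging module; the field names and the request_id convention are assumptions, and in practice the ID would be passed between services in a request header.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON line."""

    def __init__(self, service: str) -> None:
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "ts": self.formatTime(record),
            "level": record.levelname,
            "service": self.service,
            "msg": record.getMessage(),
        }
        # Attached by callers via logger.info(..., extra={"request_id": ...}).
        request_id = getattr(record, "request_id", None)
        if request_id is not None:
            entry["request_id"] = request_id
        if record.exc_info:
            entry["exc"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def make_logger(service: str) -> logging.Logger:
    # Log to stdout so that "kubectl logs" can show the output.
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter(service))
    logger = logging.getLogger(service)
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


if __name__ == "__main__":
    log = make_logger("payments")
    log.info("charge accepted", extra={"request_id": "req-7f3a"})
```

With every service logging the same request_id, you can search the output of "kubectl logs" (or a log aggregator) for one ID and reconstruct the request's path through the system.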

5. Implementing CI/CD with Kubernetes

Kubernetes provides a solid foundation for CI/CD pipelines for microservices. The Deployment object lets you declare the desired state of each microservice, which makes it straightforward to automate deploying, updating, and scaling. Kubernetes also has built-in support for rolling updates, which gradually replace old pods with new ones. This reduces the risk that a faulty change disrupts the whole service.
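
During a rolling update, a Deployment's maxSurge and maxUnavailable settings bound how many pods may exist and how many must stay available while old pods are replaced. The Python sketch below reproduces the documented arithmetic: percentages are converted to pod counts, rounding up for maxSurge and down for maxUnavailable, and both default to 25%. The fallback for when both values resolve to zero mirrors how the Deployment controller keeps a rollout able to progress; treat the function as an approximation for reasoning about rollout capacity, not as the controller's actual code.

```python
from typing import Tuple, Union

IntOrPercent = Union[int, str]


def _resolve(value: IntOrPercent, replicas: int, round_up: bool) -> int:
    """Turn an absolute number or a percentage string like "25%" into a pod count."""
    if isinstance(value, int):
        return value
    if not value.endswith("%"):
        raise ValueError(f"expected an int or a percentage, got {value!r}")
    percent = int(value[:-1])
    if round_up:
        return -(-replicas * percent // 100)  # ceiling division
    return replicas * percent // 100          # floor division


def rollout_bounds(replicas: int, max_surge: IntOrPercent = "25%",
                   max_unavailable: IntOrPercent = "25%") -> Tuple[int, int]:
    """Return (max total pods, min available pods) during a rolling update."""
    surge = _resolve(max_surge, replicas, round_up=True)
    unavailable = _resolve(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        # Both rounded to zero: allow one unavailable pod so the rollout can proceed.
        unavailable = 1
    return replicas + surge, replicas - unavailable
```

For a 10-replica Deployment with the defaults, rollout_bounds(10) returns (13, 8): the rollout may run up to 13 pods at once but never drops below 8 available pods. Rounding maxSurge up and maxUnavailable down biases the update toward keeping capacity.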

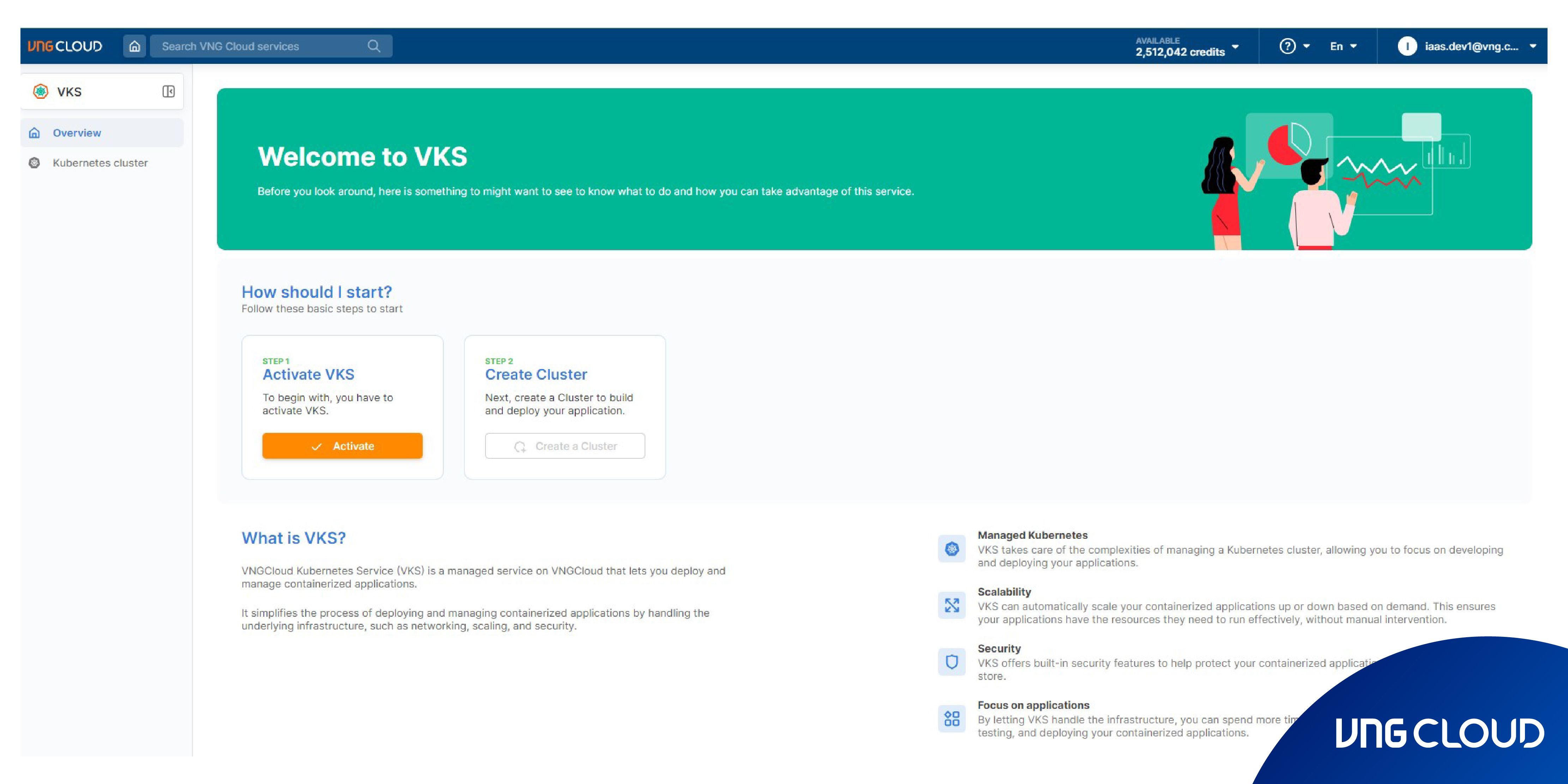

Explore the power of microservices in Kubernetes with our new VKS (VNG Cloud Kubernetes Service)! For a more comprehensive guide, please reach out to VNG Cloud.